TL;DR: AI agents fail because they search through outdated docs and blog posts. I deployed Ref (precision search for technical docs) on NimbleTools (MCP runtime) in 5 minutes. Result: 93% fewer tokens, current code every time, and no more debugging hallucinated AWS examples.

Last Tuesday, my AI coding assistant insisted this was the correct way to launch an EC2 instance:

```python
# AI's suggestion - looks good, right?
import boto3

ec2 = boto3.client('ec2')

ec2.run_instances(
    ImageId='ami-12345678',
    InstanceType='t3.micro',
    MinCount=1,
    MaxCount=1,
)
```

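Except AWS now pushes IMDSv2 for security compliance, and enforcing it means passing `MetadataOptions`, which this call never sets. At best, this code fails instantly (if your account mandates IMDSv2); at worst, it silently launches an instance that still accepts IMDSv1.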

Three hours and 47 browser tabs later, I found the actual solution buried in AWS’s latest docs. My AI had been confidently wrong because it was searching through:

- 2019 blog posts (pre-IMDSv2)

- Outdated Stack Overflow answers

- Tutorials from AWS’s old documentation

- Everything except what I actually needed

That’s when I realized: My fancy RAG setup was just organizing garbage more efficiently.

What You’ll Learn

In the next 10 minutes, I’ll show you:

- Why even good RAG systems serve up outdated code

- How Ref solves this with AI-first documentation search

- The exact commands to deploy Ref on NimbleTools

- Real benchmarks: 47,000 tokens → 3,200 tokens for the same query

Let’s fix your AI agents.

The Problem We’re All Facing (But Nobody’s Fixing)

Here’s the thing… we’ve all built RAG systems. Vector databases, embeddings, the works. And they help! But here’s what I’d been missing: even the best RAG setup is only as good as what it’s searching through.

My RAG pipeline was beautiful. It was also retrieving:

- Outdated EC2 tutorials from 2019 (pre-IMDSv2)

- Blog posts with “close enough” code that actually wasn’t

- Stack Overflow answers that worked once upon a time

- Official docs… from three versions ago

I needed better search. That’s when I found Ref.

Ref: Search Built for How Developers Actually Work

Matt Dailey built Ref because he was tired of AI agents confidently serving up deprecated code. But here’s what caught my attention - he didn’t just build another search API.

Ref understands how developers actually need documentation:

- Version-aware: Knows when methods were deprecated or added

- Code-first: Returns executable snippets, not blog posts about concepts

- Authority-based: Prioritizes official sources over tutorials

- Structure-preserving: Maintains code formatting, comments, and context

But the real kicker? It’s built as an MCP server from day one.

What really sold me: their custom crawler is tuned specifically for technical documentation. When docs have those tabbed code examples (Python | Java | Node), Ref actually reads each tab separately. Ask for Python, get Python - not whatever tab happened to be visible when the crawler passed by.
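To illustrate why per-tab reading matters: this is not Ref's crawler, just a toy sketch of the idea. It assumes a hypothetical page where each tab is a `<div data-lang="...">` wrapping a `<pre>` block, and uses Python's standard-library `html.parser` to key every snippet by its tab language instead of by whichever tab happens to be visible. `TabbedSnippetParser` is a hypothetical name.

```python
from html.parser import HTMLParser
from typing import Optional


class TabbedSnippetParser(HTMLParser):
    """Collect <pre> text per tab panel, keyed by the panel's data-lang attribute.

    Assumes (hypothetically) that each tab is a <div data-lang="..."> wrapping a <pre>.
    """

    def __init__(self) -> None:
        super().__init__()
        self.snippets: dict = {}            # language -> list of code snippets
        self._lang: Optional[str] = None    # language of the panel we're inside
        self._div_depth = 0                 # <div> nesting depth inside that panel
        self._in_pre = False
        self._buf: list = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            if self._lang is not None:
                self._div_depth += 1        # nested div inside the current panel
            elif "data-lang" in attrs:
                self._lang = attrs["data-lang"]
                self._div_depth = 1
        elif tag == "pre" and self._lang is not None:
            self._in_pre = True
            self._buf = []

    def handle_endtag(self, tag):
        if tag == "pre" and self._in_pre:
            code = "".join(self._buf).strip()
            self.snippets.setdefault(self._lang, []).append(code)
            self._in_pre = False
        elif tag == "div" and self._lang is not None:
            self._div_depth -= 1
            if self._div_depth == 0:        # left the tab panel
                self._lang = None

    def handle_data(self, data):
        if self._in_pre:                    # only keep text inside code blocks
            self._buf.append(data)


page = """
<div class="tabs">
  <div data-lang="python"><pre>ec2.run_instances(...)</pre></div>
  <div data-lang="java"><pre>ec2.runInstances(request);</pre></div>
</div>
"""
parser = TabbedSnippetParser()
parser.feed(page)
print(parser.snippets["python"])  # ['ec2.run_instances(...)']
```

Asking for Python then becomes a dictionary lookup, so a hidden Java tab can never leak into the answer.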

Why This Matters for NimbleTools Users

At NimbleTools, we built a runtime for deploying MCP servers because we were sick of the DevOps overhead. When I discovered Ref was already MCP-compatible, it was a perfect match.

Here’s why this combination works so well:

- MCP makes integration trivial - No custom API wrappers or prompt engineering.

- Ref returns structured data - It uses the full MCP spec to return rich, structured results, not just text dumps for your agent to parse.

- NimbleTools handles the infrastructure - Deploy once, use everywhere

No partnership needed - just good tools that work well together.
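To make "no custom API wrappers" concrete: at the protocol level, every MCP tool invocation is the same JSON-RPC 2.0 `tools/call` message, whichever server receives it. Here's a minimal sketch that builds one. It assumes Ref's `ref_search_documentation` tool takes the single `query` argument used with `ntcli mcp call` in Step 4. `tools_call` is a hypothetical helper, and a real client would also handle the MCP initialization handshake and transport.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests need a unique id per session


def tools_call(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


msg = tools_call(
    "ref_search_documentation",
    {"query": "EC2 launch instance with IMDSv2 required boto3 Python"},
)
print(msg)
```

Swapping Ref for any other MCP server changes only `name` and `arguments`, which is why no per-tool wrapper is needed.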

How to Use Ref Inside NimbleTools

Here’s the fun part - getting it all working in under 5 minutes.

Step 1: Create a Workspace in NimbleTools

```shell
ntcli auth login
ntcli workspace create ref-demo
ntcli workspace use ref-demo
```

This keeps your Ref deployment isolated from other projects.

If you're running in your own cloud (BYOC mode), this workspace can live entirely in your cloud account.

Step 2: Get Your Ref API Key

Head over to https://ref.tools and grab an API key. The onboarding is refreshingly simple and takes less than 30 seconds.

Step 3: Deploy Ref as an MCP Service

With your workspace selected, run:

```shell
ntcli workspace secret set REF_API_KEY=[YOUR_API_KEY]
ntcli server deploy ref-tools-mcp
```

That’s it. Ref is now running in your NimbleTools workspace.

Step 4: Test It Out (Quick CLI Check)

```shell
ntcli mcp call ref-tools-mcp ref_search_documentation \
  query="EC2 launch instance with IMDSv2 required boto3 Python"
```

You’ll get back raw, structured search results like this:

```text
overview: page='Amazon EC2 examples - Boto3 1.39.14 documentation' section='unable-to-save-cookie-preferences > amazon-ec2-examples'
url: https://boto3.amazonaws.com/v1/documentation/api/latest/guide/ec2-examples.html#amazon-ec2-examples
moduleId: boto3

overview: page='EC2 - Boto3 1.39.14 documentation' section='unable-to-save-cookie-preferences > furo-main-content > ec2 > client'
url: https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/ec2.html#client
moduleId: boto3

overview: This page provides code examples and instructions on how to use the `RunInstances` API with various AWS SDKs and the AWS CLI to launch Amazon EC2 instances. It includes examples in multiple programming languages such as .NET, Bash, C++, Java, JavaScript, Kotlin, PowerShell, Python, Rust, and SAP ABAP, along with CLI examples demonstrating different use cases.
url: https://docs.aws.amazon.com/ec2/latest/devguide/example_ec2_RunInstances_section.html
moduleId: amazon-ec2

overview: page='Amazon EC2 - Boto3 1.39.14 documentation' section='unable-to-save-cookie-preferences > furo-main-content > amazon-ec2 > launching-new-instances'
url: https://boto3.amazonaws.com/v1/documentation/api/latest/guide/migrationec2.html#launching-new-instances
moduleId: boto3
```

Alright! Boto3 1.39 is recent, and the API links look real… this is good. But in this format, it's not very helpful to me.

That’s because this format makes it easy for your agent (not you) to find what it needs. Ref is using the full MCP spec here to provide structured data - URLs, module IDs, and context - that agents can parse immediately.
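Because every hit follows the same `overview` / `url` / `moduleId` shape, turning the output into records takes only a few lines. This is a minimal sketch that assumes you have the CLI's plain-text output as a string. An MCP client would normally hand your agent the structured tool result directly. `SearchHit` and `parse_hits` are hypothetical names.

```python
import re
from dataclasses import dataclass

# Matches the three field labels seen in the CLI output above.
FIELD = re.compile(r"^(overview|url|moduleId):\s*(.*)$")


@dataclass
class SearchHit:
    overview: str
    url: str
    module_id: str


def parse_hits(raw: str) -> list[SearchHit]:
    """Group 'overview / url / moduleId' lines into one record per hit."""
    hits: list[SearchHit] = []
    fields: dict = {}
    for line in raw.splitlines():
        line = line.strip()
        if not line:
            continue
        match = FIELD.match(line)
        if match is None:
            # A wrapped line (e.g. a separate section='...') extends the current overview.
            if "overview" in fields:
                fields["overview"] += " " + line
            continue
        key, value = match.groups()
        if key == "overview":
            fields = {"overview": value}      # a new hit starts here
        elif key == "url":
            fields["url"] = value
        else:                                 # moduleId closes the record
            fields["module_id"] = value
            if "overview" in fields and "url" in fields:
                hits.append(SearchHit(**fields))
            fields = {}
    return hits


sample = """
overview: page='EC2 - Boto3 1.39.14 documentation'
url: https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/ec2.html#client
moduleId: boto3
overview: This page provides code examples for RunInstances.
url: https://docs.aws.amazon.com/ec2/latest/devguide/example_ec2_RunInstances_section.html
moduleId: amazon-ec2
"""
boto3_urls = [hit.url for hit in parse_hits(sample) if hit.module_id == "boto3"]
print(boto3_urls)
```

Filtering on `moduleId` is what lets an agent say "give me the boto3 reference, not the multi-SDK overview" without reading any page text.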

Step 5: Connect Ref to Claude Desktop

NimbleTools provides a convenient command to generate a config for Claude Desktop:

ntcli server config ref-tools-mcp

You’ll see output like this:

```json
{
  "mcpServers": {
    "ref-tools-mcp": {
      "command": "npx",
      "args": [
        "@nimbletools/mcp-http-bridge",
        "--endpoint",
        "https://mcp.nimbletools.ai/17b161fd-5142-4c1a-bf33-1e4a49220c52/ref-tools-mcp/mcp",
        "--token",
        "eyJhbGci....."
      ],
      "auth": null,
      "oauth": false
    }
  }
}
```

Copy the mcpServers section into your Claude Desktop MCP configuration file.
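If you'd rather script that copy than hand-edit JSON, here's a minimal sketch. It assumes Claude Desktop's default config location on macOS (`~/Library/Application Support/Claude/claude_desktop_config.json`; on Windows it lives under `%APPDATA%\Claude\`). It also assumes you've saved the JSON printed by `ntcli server config` to a file. `merge_config` and `update_config_file` are hypothetical helpers, not part of ntcli.

```python
import json
from pathlib import Path

# Default Claude Desktop config path on macOS (assumption: adjust on Windows/Linux).
CLAUDE_CONFIG = (
    Path.home() / "Library" / "Application Support" / "Claude" / "claude_desktop_config.json"
)


def merge_config(existing: dict, snippet: dict) -> dict:
    """Return a copy of `existing` with the snippet's mcpServers entries added.

    Servers already in the config are kept; an entry with the same name is replaced.
    Other top-level settings are left untouched.
    """
    merged = dict(existing)
    servers = dict(merged.get("mcpServers", {}))
    servers.update(snippet.get("mcpServers", {}))
    merged["mcpServers"] = servers
    return merged


def update_config_file(snippet_path: Path, config_path: Path = CLAUDE_CONFIG) -> None:
    """Merge a saved `ntcli server config` JSON file into the Claude Desktop config."""
    snippet = json.loads(snippet_path.read_text())
    existing = json.loads(config_path.read_text()) if config_path.exists() else {}
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(merge_config(existing, snippet), indent=2) + "\n")
```

For example, `update_config_file(Path("ref-config.json"))`, where `ref-config.json` is whatever file you saved the output to. Merging rather than overwriting keeps any MCP servers you had already configured.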

Restart Claude Desktop - it will now have direct access to Ref through your NimbleTools deployment.

Tip: Open Claude settings → MCP Tools to verify that `ref-tools-mcp` is active.

Step 6: See the Magic in Action

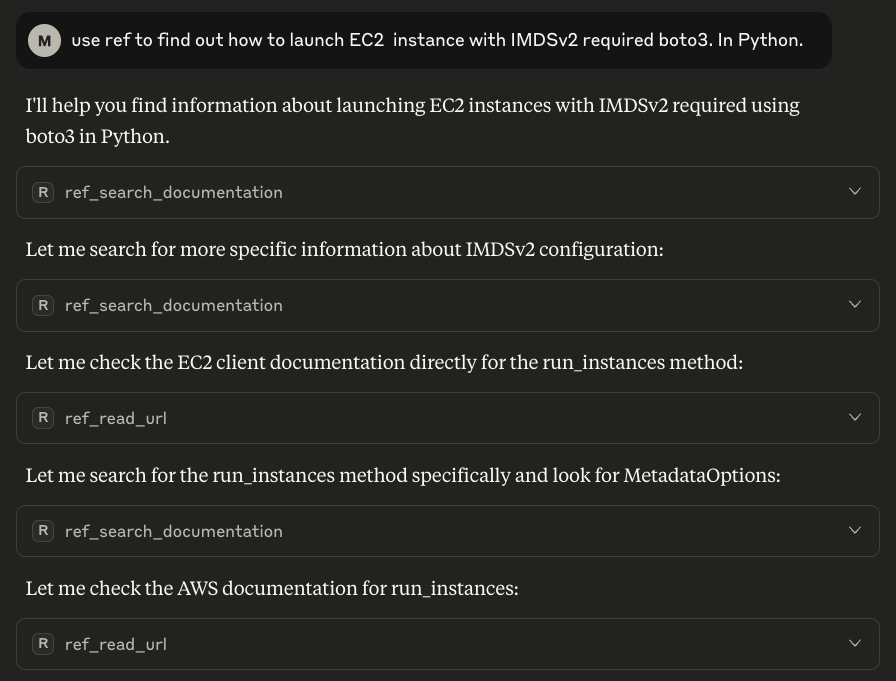

Now let’s ask Claude to solve our original problem. Here’s what happens when I ask about launching EC2 with IMDSv2.

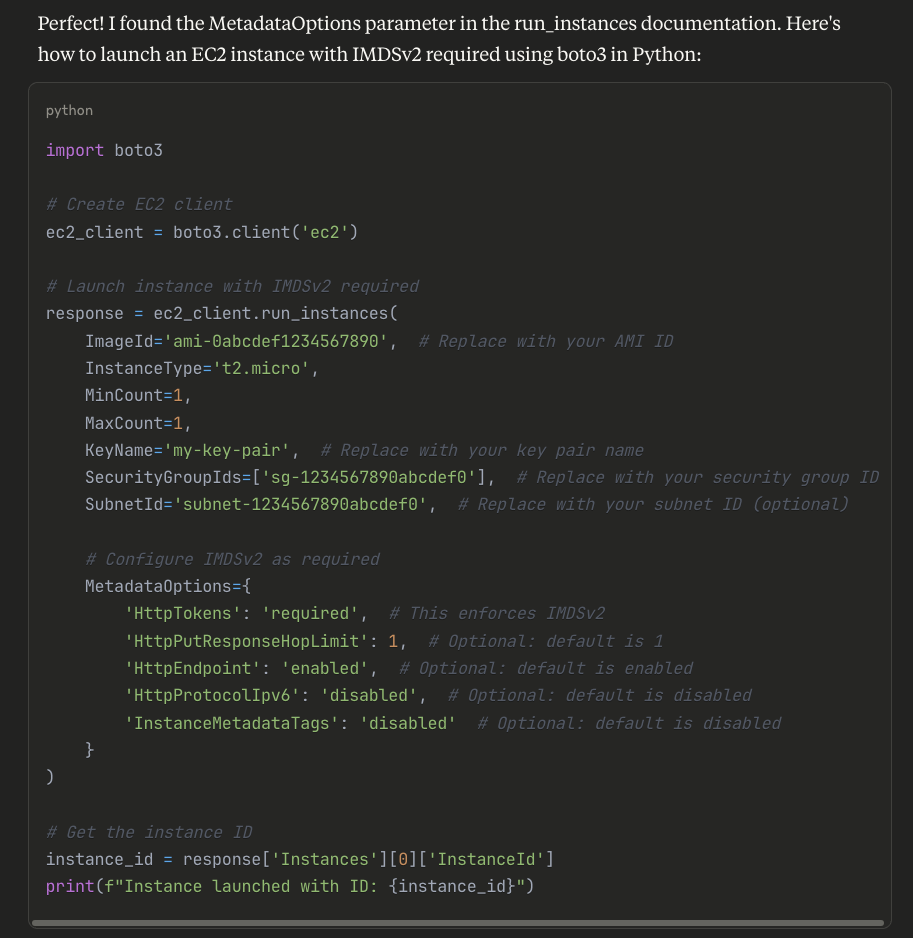

And after a few tool calls and some MCP magic… we get the answer we're looking for!

Notice what Claude found that my original AI missed:

- The required `MetadataOptions` parameter

- All four metadata settings (not just `HttpTokens`)

- Current parameter names and values

- Even included helpful comments

This isn’t a guess or amalgamation of old examples. It’s the exact, current pattern from AWS’s official boto3 documentation.
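Since the answer above lives in a screenshot, here's a text sketch of an IMDSv2-enforcing launch built on boto3's documented `MetadataOptions` parameter. It is my reconstruction, not a copy of Claude's answer. The hop limit of 2 and the tag setting are assumptions you should adjust, and the AMI ID is still a placeholder. The parameters are built in a plain function so they can be inspected without AWS credentials; `imdsv2_launch_params` is a hypothetical name.

```python
from typing import Any


def imdsv2_launch_params(image_id: str, instance_type: str = "t3.micro") -> dict[str, Any]:
    """Keyword arguments for EC2 run_instances with IMDSv2 enforced."""
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "MinCount": 1,
        "MaxCount": 1,
        "MetadataOptions": {
            "HttpTokens": "required",            # reject token-less (IMDSv1) requests
            "HttpEndpoint": "enabled",           # keep the metadata endpoint reachable
            "HttpPutResponseHopLimit": 2,        # assumption: one extra hop, e.g. for containers
            "InstanceMetadataTags": "disabled",  # assumption: don't expose tags via IMDS
        },
    }


params = imdsv2_launch_params("ami-12345678")  # placeholder AMI ID
```

With credentials configured, the launch itself is then `boto3.client("ec2").run_instances(**params)`. The only difference from the original suggestion is the `MetadataOptions` block.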

What Makes Ref Different

After a week of using it, here’s what I’ve noticed:

- Purpose-Built for Technical Docs: This isn't general search with some filters. Ref was built specifically for technical documentation, and it shows.

- Smart Crawling: It handles tabbed code examples correctly. If docs show Python/Java/Node tabs, you get the Python snippet when you ask for Python. Sounds obvious, but try getting this from other search tools. Their custom crawler understands technical documentation structure in ways generic crawlers miss.

- Always Current: Ref continuously crawls official sources. When AWS updates their docs, Ref knows within hours.

- AI-First Design: Returns structured data that AI agents can immediately use. No parsing HTML or extracting code from prose.

Real Results From My Testing

I ran some benchmarks. Same queries to my RAG system vs. Ref via NimbleTools:

Query: “How to launch EC2 with IMDSv2 enforcement”

Traditional RAG:

- 15 retrieved chunks

- 3 different approaches (conflicting)

- 50% outdated information

- 47,000 tokens used

- Required manual verification

Ref via MCP:

- 2 authoritative sources

- Current implementation only

- Included a breaking-changes warning

- 3,200 tokens used

- Copy-paste ready

The token savings alone justify the switch.
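The TL;DR's "93% fewer tokens" comes straight from these two numbers. Here's a quick check, using only the benchmark figures above; `token_reduction` is a hypothetical helper:

```python
def token_reduction(before: int, after: int) -> float:
    """Fraction of tokens saved when going from `before` to `after` tokens."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 1 - after / before


rag_tokens, ref_tokens = 47_000, 3_200  # figures from the benchmark above
saved = token_reduction(rag_tokens, ref_tokens)
print(f"{saved:.1%} fewer tokens, {rag_tokens / ref_tokens:.1f}x smaller context")
# prints: 93.2% fewer tokens, 14.7x smaller context
```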

What’s happening here? Ref finds exactly the context my agent needs without adding junk that wastes the context window. No more sifting through 15 tangentially related chunks just to find the one line that matters.

The Dashboard Is Actually Useful

Every search through Ref shows up in their dashboard:

- See exactly what your agents searched for

- Track which docs were pulled

- Monitor token usage

- Verify sources for any answer

This transparency matters when you need to audit where your AI’s advice came from.

Beyond Basic Search: What’s Possible

Since Ref is just another MCP service in NimbleTools, you can compose it with other tools:

Example workflow I built:

- Ref finds the current EC2 launch pattern

- Another MCP service pulls our internal instance configurations

- A third service validates against our security policies

- Agent combines all three to generate compliant, current code

The beauty of MCP is that each service does one thing well.
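Stripped of the MCP plumbing, that workflow is plain function composition: docs pattern in, internal config layered on top, policy check as a gate. Here's a minimal sketch in which the three services are hypothetical stand-in functions (in practice each would be an MCP tool call). The merge is shallow, and the IMDSv2 rule is just an example policy.

```python
from typing import Any, Callable

Params = dict[str, Any]


def compose_launch_params(
    find_pattern: Callable[[str], Params],
    load_internal_config: Callable[[str], Params],
    validate: Callable[[Params], list[str]],
    query: str,
    profile: str,
) -> Params:
    """Combine the docs pattern with internal config, then gate on policy checks."""
    # Shallow merge: internal values win over the docs pattern on key conflicts.
    params = {**find_pattern(query), **load_internal_config(profile)}
    problems = validate(params)
    if problems:
        raise ValueError("policy violations: " + "; ".join(problems))
    return params


def require_imdsv2(params: Params) -> list[str]:
    """Example policy: IMDSv2 must be enforced."""
    opts = params.get("MetadataOptions", {})
    return [] if opts.get("HttpTokens") == "required" else ["IMDSv2 (HttpTokens='required') not set"]


# Hypothetical stand-ins for the Ref search and the internal-config service.
def fake_ref_search(query: str) -> Params:
    return {"MinCount": 1, "MaxCount": 1, "MetadataOptions": {"HttpTokens": "required"}}


def fake_internal_config(profile: str) -> Params:
    return {"ImageId": "ami-12345678", "InstanceType": "t3.micro"}


params = compose_launch_params(
    fake_ref_search, fake_internal_config, require_imdsv2, "EC2 launch with IMDSv2", "web-tier"
)
```

Because each stage is an independent callable, swapping one service for another (say, a different policy engine) doesn't touch the others.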

Why This Stack Makes Sense

I discovered Ref while looking for better search tools for my AI agents. The fact that it was already MCP-compatible meant I could deploy it on NimbleTools immediately.

No integration work. No custom adapters. Just deploy and use.

That’s the power of standards like MCP - tools that follow the spec just work together.

Try It Yourself

You can try this integration right now. Both tools have free tiers, so there’s no risk:

- Set up your NimbleTools workspace

- Get a Ref API key

- Deploy using the commands above

- Query something that usually returns outdated info

When you see current, accurate code instead of the usual chaos, you’ll understand why I’m excited about this combination.

The Bottom Line

Stop feeding your AI agents garbage and expecting gold. Ref gives them exactly what they need: current, authoritative, structured documentation. NimbleTools makes it trivial to deploy.

Two tools. One standard (MCP). Zero integration headaches.

Your AI agents deserve better than outdated Stack Overflow posts. This is how you give it to them.

Special thanks to Matt Dailey for building Ref - it’s exactly what I’d been looking for. If you’re building MCP-compatible tools, reach out. I’m always looking for quality services to deploy on NimbleTools.

Developers: The Ref MCP implementation is open source on GitHub. It’s a great example of a well-built MCP server.

P.S. Have questions about deploying MCP services on NimbleTools? We host weekly office hours where we help folks get started and troubleshoot deployments. Sign up here - it’s the fastest way to get unstuck!